In addition to Weibo, there is also WeChat

Please pay attention

WeChat public account

Shulou

2026-02-12 Update From: SLTechnology News&Howtos shulou NAV: SLTechnology News&Howtos > Development >

Share

Shulou(Shulou.com)06/02 Report--

< C(b),则有a发生在b之前(happened before),记作 a ->B, for example, there is C1-> B1 in figure 1. Through this definition, events with different Lamport timestamps in the event set can be compared, and the [https://en.wikipedia.org/wiki/Partially_ordered_set#Formal_definition)(partial order] of the event is obtained. If C (a) = C (b), what is the order of events an and b? Suppose that an and b occur on nodes P and Q, respectively, and Pi and Qj denote the numbers we give to P and Q, respectively. If C (a) = C (b) and Pi j, also defined as an occurring before b, it is marked as a = > b. If we number A, B and C in figure 1 as Ai = 1, Bj = 2, Ck = 3, because C (B4) = C (C3) and Bj

< Ck,则 B4 =>C3 . Through the above definition, we can sort all events and obtain the https://en.wikipedia.org/wiki/Total_order)(total order of events. For the example above, we can sort from C1 to A4. * * Vector clock**Lamport timestamps help us to get event sequence relationships, but there is another sequence relationship that cannot be well represented by Lamport timestamps, that is, simultaneous relationships (concurrent) [4]. For example, in figure 1, event B4 and event C3 have no causal relationship and belong to simultaneous events, but the Lamport timestamp defines that the two are in sequence. Vector clock is another logical clock method based on Lamport timestamp. It records not only the Lamport timestamp of this node but also the Lamport timestamp of other nodes through vector structure. The principle of Vector clock is similar to Lamport timestamp, using the following illustration:! [] (https://images2015.cnblogs.com/blog/116770/201605/116770-20160502134654404-1109556515.png)_ figure 2: Vector clock space time (_ image source: wikipedia) _ _ assume that events an and b occur on node P and Q, respectively, and Vector clock is Ta and Tb, respectively. If Tb [Q] > Ta [Q] and Tb [P] > = Ta [P], then an occurs before b. Write it down as a-> b. So far, it's not much different from the Lamport timestamp, so how can Vector clock tell if there is a relationship at the same time? If Tb [Q] > Ta [Q] and Tb [P]

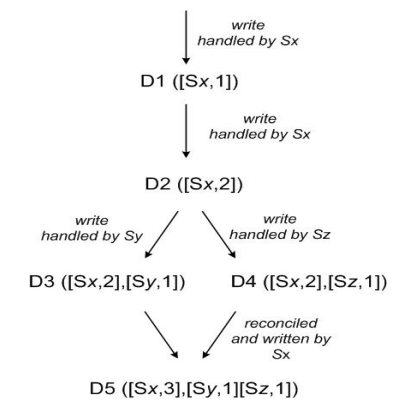

< Ta[P],则认为a、b同时发生,记作 a b。例如图2中节点B上的第4个事件 (A:2,B:4,C:1) 与节点C上的第2个事件 (B:3,C:2) 没有因果关系、属于同时发生事件。**Version vector**基于Vector clock我们可以获得任意两个事件的顺序关系,结果或为先后顺序或为同时发生,识别事件顺序在工程实践中有很重要的引申应用,最常见的应用是发现数据冲突(detect conflict)。分布式系统中数据一般存在多个副本(replication),多个副本可能被同时更新,这会引起副本间数据不一致[7],Version vector的实现与Vector clock非常类似[8],目的用于发现数据冲突[9]。下面通过一个例子说明Version vector的用法[10]:_图3: Version vector_* client端写入数据,该请求被Sx处理并创建相应的vector ([Sx, 1]),记为数据D1* 第2次请求也被Sx处理,数据修改为D2,vector修改为([Sx, 2])* 第3、第4次请求分别被Sy、Sz处理,client端先读取到D2,然后D3、D4被写入Sy、Sz* 第5次更新时client端读取到D2、D3和D4 3个数据版本,通过类似Vector clock判断同时发生关系的方法可判断D3、D4存在数据冲突,最终通过一定方法解决数据冲突并写入D5Vector clock只用于发现数据冲突,不能解决数据冲突。如何解决数据冲突因场景而异,具体方法有以最后更新为准(last write win),或将冲突的数据交给client由client端决定如何处理,或通过quorum决议事先避免数据冲突的情况发生[11]。由于记录了所有数据在所有节点上的逻辑时钟信息,Vector clock和Version vector在实际应用中可能面临的一个问题是vector过大,用于数据管理的元数据(meta data)甚至大于数据本身[12]。解决该问题的方法是使用server id取代client id创建vector (因为server的数量相对client稳定),或设定最大的size、如果超过该size值则淘汰最旧的vector信息[10][13]。**小结**以上介绍了分布式系统里逻辑时钟的表示方法,通过Lamport timestamps可以建立事件的全序关系,通过Vector clock可以比较任意两个事件的顺序关系并且能表示无因果关系的事件,将Vector clock的方法用于发现数据版本冲突,于是有了Version vector。## 分布式系统理论基础 - CAP**引言**CAP是分布式系统、特别是分布式存储领域中被讨论最多的理论,"[什么是CAP定理?](https://www.quora.com/What-Is-CAP-Theorem-1)"在Quora 分布式系统分类下排名 FAQ 的 No.1。CAP在程序员中也有较广的普及,它不仅仅是"C、A、P不能同时满足,最多只能3选2",以下尝试综合各方观点,从发展历史、工程实践等角度讲述CAP理论。希望大家透过本文对CAP理论有更多地了解和认识。**CAP定理**CAP由[Eric Brewer](https://en.wikipedia.org/wiki/Eric_Brewer_(scientist))在2000年PODC会议上提出[1][2],是Eric Brewer在Inktomi[3]期间研发搜索引擎、分布式web缓存时得出的关于数据一致性(consistency)、服务可用性(availability)、分区容错性(partition-tolerance)的猜想:> < z'.e 或者 z.e = z'.e && z.c < z'.c 时,定义z先于z'发生(z < z')。为实现PO,Zab对Follower、Leader有以下约束:有事务z和z',如果Leader先广播z,则Follower需保证先commit z对应的事务有事务z和z',z由Leader p广播,z'由Leader q广播,Leader p先于Leader q,则Follower需保证先commit z对应的事务有事务z和z',z由Leader p广播,z'由Leader q广播,Leader p先于Leader q,如果Follower已经commit z,则q需保证已commit z才能广播z'第1、2点保证事务FIFO,第3点保证Leader上具备所有已commit的事务。相比Paxos,Zab约束了事务顺序、适用于有强一致性需求的场景。Paxos、Raft、Zab再比较除Paxos、Raft和Zab外,Viewstamped Replication(简称VR)[7][8]也是讨论比较多的一致性协议。这些协议包含很多共同的内容(Leader、quorum、state machine等),因而我们不禁要问:Paxos、Raft、Zab和VR等分布式一致性协议区别到底在哪,还是根本就是一回事?[9]Paxos、Raft、Zab和VR都是解决一致性问题的协议,Paxos协议原文倾向于理论,Raft、Zab、VR倾向于实践,一致性保证程度等的不同也导致这些协议间存在差异。下图帮助我们理解这些协议的相似点和区别[10]:相比Raft、Zab、VR,Paxos更纯粹、更接近一致性问题本源,尽管Paxos倾向理论,但不代表Paxos不能应用于工程。基于Paxos的工程实践,须考虑具体需求场景(如一致性要达到什么程度),再在Paxos原始语意上进行包装。小结以上介绍分布式一致性协议Raft、Zab的核心思想,分析Raft、Zab与Paxos的异同。实现分布式系统时,先从具体需求和场景考虑,Raft、Zab、VR、Paxos等协议没有绝对地好与不好,只是适不适合。分布式系统理论进阶 - Paxos变种和优化引言《分布式系统理论进阶 - Paxos》中我们了解了Basic Paxos、Multi Paxos的基本原理,但如果想把Paxos应用于工程实践,了解基本原理还不够。有很多基于Paxos的优化,在保证一致性协议正确(safety)的前提下,减少Paxos决议通信步骤、避免单点故障、实现节点负载均衡,从而降低时延、增加吞吐量、提升可用性,下面我们就来了解这些Paxos变种。Multi Paxos首先我们来回顾一下Multi Paxos,Multi Paxos在Basic Paxos的基础上确定一系列值,其决议过程如下:phase1a: leader提交提议给acceptorphase1b: acceptor返回最近一次接受的提议(即曾接受的最大的提议ID和对应的value),未接受过提议则返回空phase2a: leader收集acceptor的应答,分两种情况处理 phase2a.1: 如果应答内容都为空,则自由选择一个提议value phase2a.2: 如果应答内容不为空,则选择应答里面ID最大的提议的valuephase2b: acceptor将决议同步给learnerMulti Paxos中leader用于避免活锁,但leader的存在会带来其他问题,一是如何选举和保持唯一leader(虽然无leader或多leader不影响一致性,但影响决议进程progress),二是充当leader的节点会承担更多压力,如何均衡节点的负载。Mencius[1]提出节点轮流担任leader,以达到均衡负载的目的;租约(lease)可以帮助实现唯一leader,但leader故障情况下可导致服务短期不可用。Fast Paxos在Multi Paxos中,proposer ->Leader-> acceptor-> learner, after three communications from the proposal to the completion of the resolution, can you reduce the communication steps? For Multi Paxos phase2a, if you are free to propose value, you can let proposer initiate the proposal directly and leader quit the communication process and change to proposer-> acceptor-> learner. This is the origin of Fast Paxos [2]. All the proposals in Multi Paxos are put forward by leader, so there is no direct proposal by proposer in multiple value,Fast Paxos in one resolution, and there may be multiple proposer proposals and multiple value in one resolution, that is, there is a proposal conflict (collision). Leader plays the role of initializing the resolution process (progress) and resolving conflicts. When a conflict occurs, leader re-participates in the resolution process and falls back to three communication steps. A feature implied by Paxos itself can also achieve the goal of reducing communication steps. If the last chosen proposal of acceptor comes from proposerA, the current resolution proposerA can directly propose a reduction of communication steps. If you want to achieve this effect, you need to record the history of the last decision (chosen) in proposer and acceptor, in order to know which proposer's proposal was decided last time before the proposal, and whether the current resolution can save a communication step. In addition to improving the efficiency of Paxos resolution from the point of view of reducing communication steps, EPaxos can also reduce the delay of Paxos resolution. For example, Generalized Paxos [3] proposes that non-conflicting proposals (such as write requests for different key) can be resolved at the same time to reduce Paxos delay. Furthermore, EPaxos [4] (Egalitarian Paxos) proposes a Paxos optimization method that not only supports the submission of non-collision proposals to reduce the delay, but also balances the load of each node and minimizes the communication steps. To achieve these goals, there are several key points in the implementation of EPaxos. First, there is no global leader in the EPaxos, but the proposer of each proposal is the leader (command leader) of the current proposal; the second is that the proposal without interfere can be submitted at the same time; the third is to skip the prepare and directly enter the accept stage. The process of EPaxos resolution is as follows: the resolution process of two update requests that do not affect each other is shown on the left, and the resolution of two update requests that influence each other is shown on the right. Multi Paxos, Mencius, EPaxos latency and throughput comparison: in order to determine whether decisions affect each other, EPaxos can record the dependency between decisions. Summary several variants based on Paxos are introduced above. In Mencius, nodes take turns to do leader and balance node load, Fast Paxos reduces one communication step, Generalized Paxos allows decisions that do not affect each other at the same time, EPaxos has no global leader, and all nodes share the load equally. Optimization is endless, as for Paxos, Paxos variants and optimizations applied in different scenarios and different ranges will continue to emerge. "how to understand web distributed systems" is introduced here, thank you for reading. If you want to know more about the industry, you can follow the website, the editor will output more high-quality practical articles for you!

Welcome to subscribe "Shulou Technology Information " to get latest news, interesting things and hot topics in the IT industry, and controls the hottest and latest Internet news, technology news and IT industry trends.

Views: 0

*The comments in the above article only represent the author's personal views and do not represent the views and positions of this website. If you have more insights, please feel free to contribute and share.

The market share of Chrome browser on the desktop has exceeded 70%, and users are complaining about

The world's first 2nm mobile chip: Samsung Exynos 2600 is ready for mass production.According to a r

A US federal judge has ruled that Google can keep its Chrome browser, but it will be prohibited from

Continue with the installation of the previous hadoop.First, install zookooper1. Decompress zookoope

About us Contact us Product review car news thenatureplanet

More Form oMedia: AutoTimes. Bestcoffee. SL News. Jarebook. Coffee Hunters. Sundaily. Modezone. NNB. Coffee. Game News. FrontStreet. GGAMEN

© 2024 shulou.com SLNews company. All rights reserved.